ISSN: 0970-938X (Print) | 0976-1683 (Electronic)

Biomedical Research

An International Journal of Medical Sciences

Research Article - Biomedical Research (2018) Volume 29, Issue 10

Efficient oppositional based optimal Harr wavelet for compound image compression using MHE

1Department of Computer Science, Bharathiyar University, Coimbatore, India

2Mar Ephraem College of Engineering & Technology, Malankara Hills, Elavuvilai, Marthandam, India


Accepted on April 09, 2018

DOI: 10.4066/biomedicalresearch.29-18-501

Image compression reduces the size in bytes of a graphics file without degrading the quality of the image to an unacceptable level. Compound image compression is ordinarily based on one of three classification strategies: object-based, layer-based, or block-based. The degradation of a compound image or document under compression depends on how the files are stored and transmitted. The proposed model uses two methods for compression: optimal Haar wavelets and Merging Huffman Encoding (MHE). The Haar wavelet coefficients of the Discrete Wavelet Transform (DWT) are optimized using Oppositional based Grey Wolf Optimization (OGWO). Module-based encryption is then performed as part of the compression strategy. The highest Compression Ratio (CR) achieved for a compound image is 97.56%, which compares favourably with existing systems; Peak Signal to Noise Ratio (PSNR), Mean Square Error (MSE), and Compression Size (CS) were also evaluated for all test images.

Keywords

Image compression, Optimization, Wavelets, Encoding, Digital compound images and transformation and Huffman coding.

Introduction

The amount of data on the web is increasing rapidly with the popularization of the World Wide Web. Images account for a substantial share of web data [1]. It is therefore worthwhile to compress an image by storing only the essential information needed to reconstruct it. An image can be thought of as a matrix of pixel (or intensity) values, and in order to compress it, redundancies must be exploited. Image compression is the general term for the various algorithms that have been developed to address these issues [2]. Compound images are among the best means of representing data [3]. Data compression is the reduction or elimination of redundancy in data representation in order to achieve savings in storage and communication costs [4]. A wavelet-based image compression system can be built by choosing a type of quantizer, wavelet function, and statistical coder [5].

Compression also reduces the time required for images to be sent over the internet or downloaded from web pages. There are several different ways in which image files can be compressed [6,7]. Lossless coding does not permit high compression ratios, whereas lossy coding achieves high compression ratios. Image compression is the application of data compression to digital images [8]. Depending on the algorithm used, files can be greatly reduced from their original size. Compression is accomplished by applying a linear transform, quantizing the resulting transform coefficients, and entropy coding the quantized values [9]. Recently, many studies have been made on wavelets. This paper presents an overview of what wavelets have brought to the field of image processing [10,11]. Additional methods for image compression involve the use of fractals as well as wavelets. These methods have not gained widespread acceptance for use on the Internet as of this writing [12].

Such coding has many advantages that suit image compression, for example good performance even at low bit rates, region-of-interest coding, good real-time behaviour, simple operation, and easy SoC integration [13,14]. The objective of such a lossy compression strategy is to maximize the overall compression ratio by compressing every region separately with its own compression ratio, depending on its textural significance, so as to preserve textural characteristics [15]. A compressed file is first decompressed and then used. Many software packages are used for decompression, and the choice depends on which type of file was compressed [16,17]. Consequently, image compression techniques that can optimally compress different types of image content are essential to reduce the growth rate of image data on the web. However, the commonly supported image formats on the Web are limited to JPEG, GIF, and PNG. Hardware-level compression of raw image data at runtime is also being actively researched for medical image processing [18].

Literature Review

Recently, the use of Compressive Sensing (CS) as a cryptosystem has attracted attention because of its compressibility and low complexity during the sampling process, as noted by Hu et al. in 2017 [19]. However, when such a cryptosystem is applied to images, ensuring the privacy of the image while maintaining efficiency becomes a challenge. They proposed a novel image coding scheme that achieves combined compression and encryption under a parallel compressive sensing framework, where both CS sampling and CS reconstruction are performed in parallel. In this way, efficiency is ensured. For security, resistance to Chosen Plaintext Attack (CPA) is achieved through the cooperation between a nonlinear chaotic sensing matrix construction process and a counter mode operation. Furthermore, the defect of energy information leakage in a CS-based cryptosystem is overcome by a diffusion mechanism. Experimental and analytical results showed that the scheme achieves effectiveness, efficiency, and high security simultaneously.

Agarwal et al. in 2014 [20] used the Discrete Wavelet Transform (DWT) and Singular Value Decomposition (SVD). The singular values of a binary watermark were embedded in the singular values of the LL3 sub-band coefficients of the host image by making use of Multiple Scaling Factors (MSFs). The MSFs were optimized using a recently proposed Firefly algorithm with an objective function that is a linear combination of imperceptibility and robustness. The PSNR values showed that the visual quality of the signed and attacked images is good. The embedding algorithm was robust against common image processing operations. It was concluded that the embedding and extraction steps of the proposed algorithm are well optimized and robust, and show an improvement over other similar reported methods.

In 2017, Zhang et al. [21] proposed an image compression-encryption hybrid algorithm in the analysis sparse model. The sparse representation of the original image was obtained with an overcomplete fixed dictionary in which the order of the dictionary atoms was scrambled, so the sparse representation can be considered an encrypted version of the image. In addition, the sparse representation was compressed to reduce its dimension and simultaneously re-encrypted by compressive sensing. To enhance the security of the algorithm, a pixel-scrambling method was used to re-encrypt the compressive sensing measurements. Various simulation results confirm that the proposed image compression-encryption hybrid algorithm could provide considerable compression performance with good security.

Embedded Zerotree Wavelet (EZW) is an efficient compression method with advantages in coding, as discussed by Zeng et al. in 2017 [22]; however, its multilayer structure information coding reduces the signal compression ratio. Their paper studied the optimization of the EZW compression algorithm and aimed to improve it. First, they used the lifting wavelet transform to process electrocardiograph (ECG) signals, focusing on the lifting algorithm. Second, they used the EZW compression coding algorithm, determining the feature detection value through ECG data decomposition. Then, according to the feature information, they weighted the wavelet coefficients of the ECG (using the coefficient as a measure of weight) to achieve improved compression.

In 2016, Karimi et al. [23] proposed that special-purpose compression algorithms and codecs could compress a variety of such images with better performance than general-purpose lossless algorithms. For medical images, many lossless algorithms have been proposed so far. A compression algorithm comprises different stages. Before designing a special-purpose compression method, one needs to know how much each stage contributes to the overall compression performance, so that time and effort can be invested accordingly in designing the different stages. By comparing and evaluating these multi-stage compression techniques, they designed more efficient compression methods for big data applications.

Chang et al. in 2016 [24] presented an image classification method that first extracts rotation-invariant image texture features in the Singular Value Decomposition (SVD) and DWT domains. It then uses a Support Vector Machine (SVM) to perform image texture classification. This approach is called SRITCSD for short. First, the method applies the SVD to enhance the textures of an image. Then, it extracts the texture features in the DWT domain of the SVD version of the image. The SRITCSD method also uses the SVM as a multi-classifier for image texture features. Meanwhile, the Particle Swarm Optimization (PSO) algorithm is used to improve the SRITCSD method by selecting a nearly optimal combination of features and a set of parameters for the SVM. The experimental results showed that the SRITCSD method can achieve satisfactory results and outperform the other existing methods considered.

Turcza et al. in 2016 [25] designed a compressor that works directly on data from a CMOS image sensor with a Bayer Color Filter Array (CFA). By optimally combining transform-based coding with predictive coding, the proposed compressor achieves higher average image quality and a lower bit rate than significantly more complex JPEG-LS based coding schemes. For typical WCE images, the average compression ratio is 3.9, while the PSNR is 46.5 dB, which means that high image quality is achieved. The designed image compressor, together with a 1 kb FIFO (First-In, First-Out) stream buffer and a data serializer, was implemented in Verilog and synthesized with the UMC 180 nm CMOS process as an Intellectual Property (IP) core. The integrated FIFO and data serializer facilitate a low-cost interface to an RF (radio frequency) transmitter. The silicon area of the core is only 0.96 × 0.54 mm. The IP core, working at a clock frequency as low as 5.25 MHz, can process 20 fps (frames per second).

Problem Identification

• Run Length Encoding (RLE) compression is efficient only for files that contain a lot of repetitive data. White or black regions are more suitable than coloured images [17].

• It occupies more storage space, which does not give the optimal compression ratio. The LZW algorithm works only when the input data is sufficiently large and there is sufficient redundancy in the data [24,26].

• Arithmetic coding involves complex operations, since it consists of additions, subtractions, and multiplications. Arithmetic coding is significantly slower than Huffman coding, and infinite precision is not available.

• The code tree must be transmitted along with the message, unless some code table or prediction table is agreed upon between sender and receiver [9].

• Fourier-based transforms (e.g. DCT) are efficient at exploiting the low-frequency nature of an image. The high-frequency coefficients are coarsely quantized, and consequently the reconstructed image has low quality at the edges.

• A high MSE is obtained together with a lower compression ratio.

• DCT-based image coders perform very well at moderate bit rates; at higher compression ratios, image quality degrades because of the artifacts resulting from the block-based DCT scheme [14].

Methodology

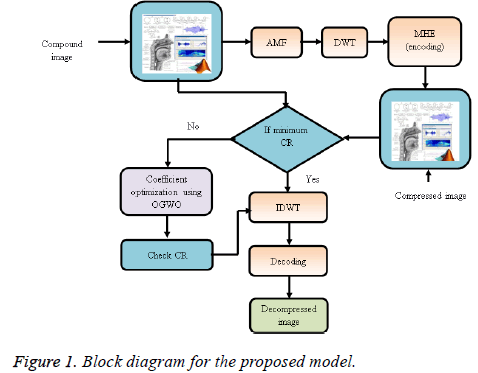

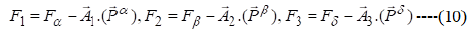

Image compression is the application of data compression to digital images. In effect, the goal is to reduce the redundancy of the image data in order to store or transmit it in an efficient form. Most of the visually significant information about the image is concentrated in a few coefficients of the DWT. In lossless compression, the compressed image is identical to the original; in lossy compression, some irrelevant information is removed from the image. First, the compound image goes through the pre-processing steps; the DWT is then applied to transform the image pixel values from the spatial domain to the frequency domain. To improve this process, the DWT coefficients are optimized with the help of nature-inspired optimization techniques. For compression, an encoding method is used to encode the image. In this study, MHE is used; the compression ratio is then computed for the original and encoded image. The DWT is an effective technique for representing data with fewer coefficients. The typical compression can then be threefold. When developing standards for digital image communication, one needs to ensure that the image quality is maintained or improved. The proposed technique has been compared with other techniques to quantify its performance. Finally, after the compression ratio is obtained, the decoding procedure is applied to reconstruct the image with the help of the inverse DWT (IDWT). Figure 1 shows the block diagram of the proposed image compression process.

This image compression research model considers the following modules:

• Compound image module.

• Pre-processing module.

• DWT module.

• Optimization module.

• Compression module.

Compound image module

Compressing compound images with a single algorithm that simultaneously meets the requirements for text, images, and graphics has been difficult, and therefore new algorithms are needed that can effectively reduce the file size without degrading the quality. Each of the image data types exhibits different characteristics, and the best compression performance for such images can be achieved when different compression techniques are used for each of these image data types.

Pre-processing module

Image pre-processing is one of the preliminary steps that are required to ensure the high accuracy of the subsequent steps. This process produces a corrected image whose characteristics are as close as possible to those of the original image. In this compound image compression model, the Adaptive Median Filter (AMF) is used.

AMF

Median filtering is similar to an averaging filter in that each output pixel is computed from the pixel values in the neighbourhood of the corresponding input pixel. However, with median filtering, the value of an output pixel is determined by the median of the neighbouring pixels rather than the mean. The median is much less sensitive than the mean to extreme values. The adaptive median filter is based on a trans-conductance comparator, in which the saturation current can be adjusted to act as a local weight operator. A switching median filter is used to speed up the process, because only the noise pixels are filtered.
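The switching idea described above (filter only the pixels suspected to be noise) can be illustrated with a minimal sketch. It is not the authors' implementation: the 3 × 3 window, the rule that a pixel is "noisy" when it equals the extreme values 0 or 255, and the function name `switching_median_filter` are all assumptions made for illustration.

```python
from statistics import median

def switching_median_filter(img, lo=0, hi=255):
    """Replace only suspected impulse-noise pixels by the median of their 3x3 neighbourhood.

    Assumption for this sketch: a pixel is treated as noise when it equals lo or hi.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]              # copy, so clean pixels stay untouched
    for r in range(h):
        for c in range(w):
            if img[r][c] not in (lo, hi):
                continue                       # pixel looks clean: skip it
            window = [img[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            out[r][c] = int(median(window))    # median is robust to the outlier itself
    return out

noisy = [[10, 12, 11],
         [13, 255, 12],
         [11, 10, 13]]
cleaned = switching_median_filter(noisy)
print(cleaned[1][1])  # the impulse at the centre is replaced by the neighbourhood median
```

Because the median ignores the single extreme value in the window, the centre pixel is restored to a value typical of its neighbours while every other pixel is left exactly as it was.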

Discrete wavelet transform (DWT)

The DWT represents the signal in a dynamic sub-band decomposition. Generating the DWT in a wavelet packet allows sub-band analysis without the constraint of dynamic decomposition. The DWT, based on time-scale representation, provides efficient multi-resolution sub-band decomposition of signals. It has become a powerful tool for signal processing and finds numerous applications in various fields, such as audio compression, pattern recognition, texture discrimination, and computer graphics. This paper considers the Haar wavelet for the transformation process. Wavelet functions are generated by the translation and dilation of a single function, known as the "mother wavelet", denoted by δ(t) and given by equation (1).

Haar wavelet process: Haar wavelet compression is an efficient way to perform both lossless and lossy image compression. It relies on averaging and differencing values in an image matrix to produce a matrix that is sparse or nearly sparse. A sparse matrix is a matrix in which a large portion of the entries are 0. Discrete Haar functions may be defined as functions determined by sampling the Haar functions at 2^n points. These functions can be conveniently represented in matrix form. The Haar matrices H(n) are considered in the natural and sequency orderings, which differ in the ordering of rows. The Haar wavelet's mother wavelet function δ(t) can be described as:

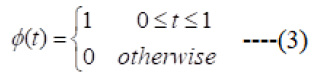

Its scaling function ϕ(t) can be described as:
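For reference, the textbook definitions of the Haar mother wavelet and scaling function on the unit interval are given below, written with the symbols used in this section. They are the standard forms and are assumed, not confirmed, to match the equations in the original article.

```latex
\[
\delta(t) =
\begin{cases}
 1,  & 0 \le t < \tfrac{1}{2} \\
 -1, & \tfrac{1}{2} \le t < 1 \\
 0,  & \text{otherwise}
\end{cases}
\qquad
\phi(t) =
\begin{cases}
 1, & 0 \le t < 1 \\
 0, & \text{otherwise}
\end{cases}
\]
```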

Haar wavelet transform has a number of advantages:

• It is conceptually simple and fast.

• It is memory efficient, since it can be calculated in place without a temporary array.

• It is exactly reversible without the edge effects that are a problem with other wavelet transforms.

• It gives a high compression ratio and high PSNR.

• It increases detail in a recursive manner.
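The averaging and differencing that underlies the Haar transform, and its exact reversibility, can be shown with a small one-dimensional sketch. The unnormalised form (divide by 2) and the function names `haar_step` and `inverse_haar_step` are choices made for this illustration; the article does not state which normalisation it uses.

```python
def haar_step(values):
    """One level of the unnormalised Haar transform on an even-length sequence.

    Returns the pairwise averages followed by the pairwise half-differences.
    """
    pairs = list(zip(values[0::2], values[1::2]))
    averages = [(a + b) / 2 for a, b in pairs]
    details = [(a - b) / 2 for a, b in pairs]
    return averages + details

def inverse_haar_step(coeffs):
    """Undo haar_step: each (average, detail) pair restores two samples."""
    half = len(coeffs) // 2
    out = []
    for avg, det in zip(coeffs[:half], coeffs[half:]):
        out.extend([avg + det, avg - det])
    return out

row = [88, 88, 89, 90, 92, 94, 96, 97]   # one row of smooth pixel values
coeffs = haar_step(row)
restored = inverse_haar_step(coeffs)
print(coeffs)    # [88.0, 89.5, 93.0, 96.5, 0.0, -0.5, -1.0, -0.5]
print(restored == row)  # True
```

For a smooth row the detail coefficients are close to zero, which is what makes the transformed matrix sparse or nearly sparse and therefore easier to compress. Applying the same step to the rows and then the columns of an image gives one level of a two-dimensional transform.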

To improve the compression ratio of the proposed model, the Haar wavelet coefficients are optimized using the Opposition based Grey Wolf Optimization (OGWO) technique.

Optimization module

The objective of this section is to select the optimal coefficients using the Grey Wolf Optimization algorithm. Generally, a filter bank is a transform device that is used to remove or reduce spatial redundancies in image coding applications. To achieve high performance, this application requires filter banks with specific features that differ from the classical Perfect Reconstruction (PR) problem.

Grey wolf optimization (GWO)

GWO is used to solve various optimization problems in different fields and provides highly competitive solutions. The algorithm is simple and robust, and has been used in various complex problems. GWO is strong in terms of exploration, exploitation, local optima avoidance, and convergence. However, its ability still depends to some degree on the factors that govern exploration and exploitation.

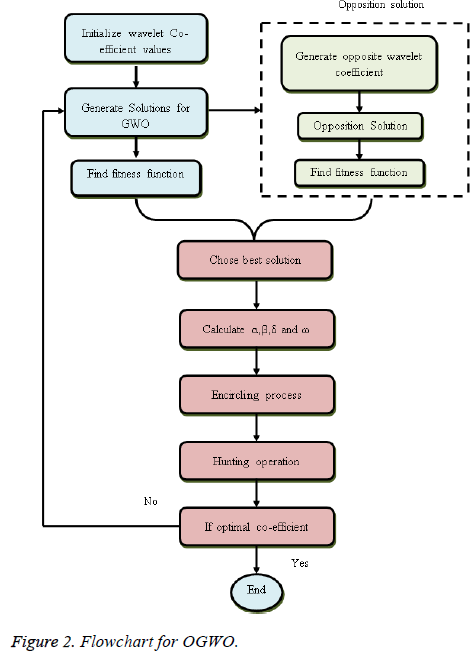

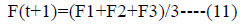

Opposition based grey wolf optimization (OGWO)

A population is formed from a group of randomly generated solutions. A fitness function is used to evaluate each solution in the population. The solutions in the population are evaluated in turn, and the evaluation function is supplied by the user. Two solutions are selected based on their fitness: the higher the fitness, the higher the chance of being selected. These solutions are used to regenerate one or more new solutions. The new solutions are then modified randomly, and the update procedure is repeated to rebuild the solution set. The step-by-step procedure of the proposed OGWO-based optimal wavelet coefficient selection is explained below, and the flow chart is shown in Figure 2.

Step 1: Input solution generation.

The initial solutions are generated randomly, and each solution is regarded as a gene. The genes are combined into a chromosome, which is designated as the solution set. The opposition-based algorithm differs from the standard grey wolf algorithm in that it also computes the opposite solution of each chromosome, using the equation below.
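The opposite-solution equation is not legible in this copy of the article. A common formulation in opposition-based learning takes the opposite of a value x in the interval [lb, ub] to be lb + ub − x, and keeps the fitter candidates from the union of the random solutions and their opposites. The sketch below assumes that formulation; the function names, the bounds, and the toy fitness are hypothetical.

```python
import random

def opposite(solution, lower, upper):
    """Opposite point of a solution: each component x becomes lb + ub - x (assumed form)."""
    return [lo + hi - x for x, lo, hi in zip(solution, lower, upper)]

def opposition_init(pop_size, lower, upper, fitness, rng):
    """Generate random solutions, add their opposites, keep the pop_size fittest (maximisation)."""
    population = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
                  for _ in range(pop_size)]
    candidates = population + [opposite(s, lower, upper) for s in population]
    candidates.sort(key=fitness, reverse=True)
    return candidates[:pop_size]

print(opposite([1, 4], [0, 0], [10, 10]))  # [9, 6]

# Toy fitness for illustration only; in the article the fitness is the compression ratio.
initial = opposition_init(4, [0, 0], [10, 10],
                          fitness=lambda s: -sum(s), rng=random.Random(1))
```

Evaluating both a candidate and its opposite doubles the number of fitness evaluations at initialization, in exchange for a starting population that covers the search interval more evenly.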

Step 2: Initialization.

Solution encoding is an important step of the optimization algorithm. In this work, we optimize the filter coefficients for the input images. Every agent consists of low-pass decomposition filter coefficients, high-pass decomposition filter coefficients, low-pass reconstruction filter coefficients, and high-pass reconstruction filter coefficients. Here, the filter coefficient values are assigned randomly. The optimal values are used to split the image into sub-bands. The frequency bands depend on the range of the coefficient values. The input parameters, for example hidden layer and neuron, are initialized as an initial solution, and 'i' is the number of solutions; this procedure is known as the initialization process.

Step 3: Fitness evaluation.

The fitness of each individual solution is computed using equation (4), as follows:

Fitness = max(CR)    (4)

CR = (Compressed file size / Actual file size)    (5)

According to equation (4), the ultimate objective is the maximization of the objective function CR, which depends heavily on the filter coefficients.

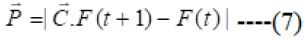

Step 4: Updating α, β, δ and ω.

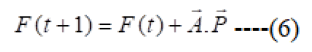

The fittest solution is regarded as alpha (α); accordingly, the second and third best solutions are named beta (β) and delta (δ), respectively. The rest of the candidate solutions are considered omega (ω). In the GWO technique, the hunting (optimization) is guided by α, β, δ and ω. The first three best solutions obtained so far are saved, and the other search agents (including the omegas) are required to update their positions according to the position of the best search agents. This agent updating procedure uses the equations below.

The coefficient vectors are found using equation (8).

A = 2a·r1 − a, C = 2·r2    (8)

where t stands for the current iteration, A and C are the coefficient vectors, P is the position vector of the prey, and the other position vector is that of a grey wolf. The components of a are linearly decreased from 2 to 0 over the course of the iterations, and r1, r2 are random vectors in (0, 1).
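The position update equations themselves are not legible in this copy, so the sketch below assumes the commonly published GWO form: for each leader, D = |C·X_leader − X| and X' = X_leader − A·D, with the new position taken as the mean of the three estimates obtained from α, β and δ. The coefficient vectors follow equation (8). The function names, the clamping to bounds, and the toy fitness are assumptions; the opposition-based initialization is omitted here for brevity.

```python
import random

def gwo_step(wolves, fitness, a, rng):
    """One GWO iteration (maximisation): every wolf moves toward alpha, beta and delta."""
    leaders = sorted(wolves, key=fitness, reverse=True)[:3]   # alpha, beta, delta
    new_wolves = []
    for x in wolves:
        pos = []
        for j in range(len(x)):
            estimates = []
            for leader in leaders:
                A = 2 * a * rng.random() - a       # equation (8)
                C = 2 * rng.random()               # equation (8)
                D = abs(C * leader[j] - x[j])      # distance to the leader (assumed form)
                estimates.append(leader[j] - A * D)
            pos.append(sum(estimates) / 3)         # average of the three estimates
        new_wolves.append(pos)
    return new_wolves

def gwo(fitness, dim, bounds, n_wolves=10, n_iter=50, seed=0):
    """Minimal GWO loop; 'a' decreases linearly from 2 toward 0 over the iterations."""
    rng = random.Random(seed)
    lo, hi = bounds
    wolves = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_wolves)]
    best = max(wolves, key=fitness)
    for t in range(n_iter):
        a = 2 - 2 * t / n_iter
        moved = gwo_step(wolves, fitness, a, rng)
        wolves = [[min(hi, max(lo, v)) for v in w] for w in moved]  # keep inside bounds
        best = max(wolves + [best], key=fitness)
    return best

def toy_fitness(w):
    """Illustrative objective only (peak at (1, 1)); the article maximises CR instead."""
    return -sum((v - 1.0) ** 2 for v in w)

best = gwo(toy_fitness, dim=2, bounds=(-5.0, 5.0))
```

When |A| is at least 1 the estimate can land beyond the leader, which spreads the pack out; as a shrinks, |A| falls below 1 and the wolves close in on the three leaders.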

Step 5: Hunting process.

To simulate the hunting behaviour of grey wolves mathematically, the alpha (best candidate solution), beta, and delta are assumed to have better knowledge of the potential location of the prey. Consequently, the first three best solutions obtained so far are saved, and the other search agents (including the omegas) are obliged to update their positions according to the position of the best search agents.

Step 6: Attacking prey and search new prey solution.

The grey wolves finish the hunt by attacking the prey when it stops moving. With decreasing A, half of the iterations are devoted to exploration (|A| ≥ 1) and the other half to exploitation (|A| < 1). GWO has only two main parameters to be adjusted (A and C). We have kept the OGWO algorithm as simple as possible, with the fewest operators to be adjusted. The procedure continues until the maximum number of iterations is reached.

Step 7: Termination process.

The algorithm terminates only when the maximum number of iterations is reached. The solution holding the best fitness value is selected and designated as the best coefficient values for the image. Once the best fitness is achieved by means of the GWO algorithm, the selected solution is applied to the image.

Huffman encoding process

Huffman coding is a technique that works on both data and images for compression. It is generally done in two passes. In the first pass, a statistical model is built, and in the second pass, the image data is encoded using the codes generated from that statistical model. These codes have variable length and use an integral number of bits. This reduces the average code length, so the overall size of the compressed data is smaller than the original.

Step 1: Read the image into the MATLAB workspace.

Step 2: Convert the given colour image into a grey-level image.

Step 3: The probabilities of the symbols are arranged in decreasing order and the lowest probabilities are merged; this step continues until only two probabilities are left, and codes are assigned according to the rule that the most probable symbol receives the shortest code.

Step 4: Huffman encoding is then performed, i.e. mapping the code words to the corresponding symbols yields the compressed data.
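Steps 3 and 4 above can be sketched with a priority queue, a standard way to build a Huffman code. This is a generic illustration in Python, not the authors' MATLAB code; the pixel values and function names are made up for the example.

```python
import heapq
from collections import Counter

def huffman_codes(symbols):
    """Build a Huffman code table {symbol: bit string} from a sequence of symbols."""
    freq = Counter(symbols)
    if len(freq) == 1:                       # degenerate case: one distinct symbol
        return {next(iter(freq)): "0"}
    # Heap items: (count, tie_breaker, partial code table for that subtree).
    heap = [(count, i, {sym: ""}) for i, (sym, count) in enumerate(freq.items())]
    heapq.heapify(heap)
    tie = len(heap)
    while len(heap) > 1:
        c1, _, codes1 = heapq.heappop(heap)  # the two least frequent subtrees
        c2, _, codes2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in codes1.items()}
        merged.update({s: "1" + c for s, c in codes2.items()})
        heapq.heappush(heap, (c1 + c2, tie, merged))
        tie += 1
    return heap[0][2]

def encode(symbols, codes):
    """Map every symbol to its code word and concatenate the result."""
    return "".join(codes[s] for s in symbols)

pixels = [0, 0, 0, 0, 255, 255, 128]         # hypothetical grey levels
codes = huffman_codes(pixels)
bits = encode(pixels, codes)
print(codes)   # {128: '00', 255: '01', 0: '1'}
print(bits)    # 1111010100
```

The most frequent grey level receives the one-bit code, so the seven pixels take 10 bits instead of the 14 bits a fixed two-bit code would need.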

Merging based Huffman encoding (MHE)

The compressed image is then encoded in the subsequent steps. The MHE algorithm is carried out in three stages:

• Creating Huffman code for original data.

• Code conversion based on conditions.

• Encoding.

(a) Creating Huffman code for original data: First, the probabilities of the symbols are arranged in descending order. From these symbols, take the two lowest probabilities, PX and PY. Create a new node using these two probabilities as branches; the value of the new node is the arithmetic sum of the two probabilities. This process is repeated using the new node until only a single node is left. The upper and lower members of each pair are labelled "0" and "1", or vice versa. The code for each original symbol is determined by traversing from the root node to the leaf of the tree and noting the branch label at every node.

(b) Code conversion based on conditions: Code conversion can be carried out after the Huffman code has been generated for the original data. The process is as follows. First, the original data and its code words are taken. Code conversion is then done by merging two symbols, based on the number of times the chosen combination of two symbols is repeated. The merging is carried out according to several criteria.

• First, merging can be applied to the chosen symbols when the following condition is satisfied: the chosen symbol is repeated more than two times. It then satisfies the condition and qualifies for merging.

• Second, among the selected pairs, the same combination with the first digit of the pair must not occur again.

• Third, the bit length of the first position of the pair must be less than the bit length of the second position of the pair.

• If the above three conditions are satisfied and the symbol in the first position is repeated twice, then the new pair replaces the selected (old) pair.

• Similarly, the above process is repeated for all selected code words.

(c) Encoding process: Encoding is done on the basis of the combination of symbols used in the condition-based code conversion and merging. The process is as follows. First, the code conversion step is checked to decide whether the code formed by the code conversion process is to be considered or not. Then, the above three conditions are applied to check every symbol in order to encode the original data. After this verification, a code is formed for the original data. The final code is the encoded data according to the merging-based Huffman encoding technique.

Example for MHE

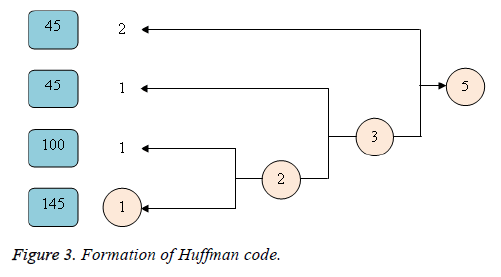

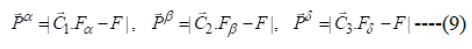

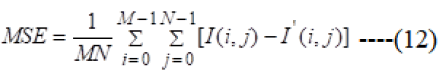

Let us assume the frequency values (45) (85) (100) (145) (45) as the original data; from this original data, the Huffman code is formed for every symbol. The formation of the code is shown below in Figure 3.

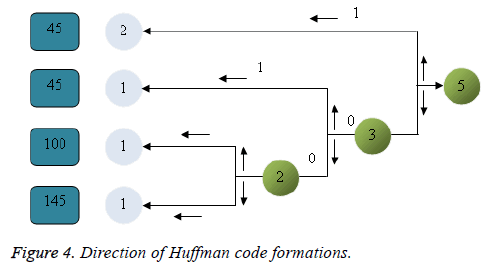

Figure 4 is explained as follows. First, repeated symbols are listed once, together with the frequency of each symbol in the original data; i.e. the unsigned number beside "45" represents the number of times the symbol "45" is repeated in the original data. Next, the two symbols with the lowest frequencies are taken, 0 and 1 are assigned to them, and the total frequency of the two symbols is written beneath them. This process is continued by taking the next two symbols and assigning ones until the last step.

The Huffman code is then formed for every symbol by following the corresponding branches from the last step back to the first. From Figure 4, the Huffman code formed for the symbol "45" is 1; for the symbol "85" it is 01; for the symbol "100" it is 001; and for the symbol "145" it is 0001. The Huffman code for the original data (45) (85) (100) (145) (45) is therefore "10100100011" (Table 1).
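The bit string quoted above can be checked by concatenating the stated code words, and decoding it confirms that the code table is prefix-free. The snippet uses only the code words given in the text; the helper name `decode` is introduced here for illustration.

```python
# Code words exactly as stated in the text for the four distinct symbols.
codes = {45: "1", 85: "01", 100: "001", 145: "0001"}
data = [45, 85, 100, 145, 45]

bitstream = "".join(codes[s] for s in data)

def decode(bits, table):
    """Read bits left to right and emit a symbol whenever a full code word is matched."""
    inverse = {word: sym for sym, word in table.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in inverse:
            out.append(inverse[current])
            current = ""
    return out

print(bitstream)                         # 10100100011
print(decode(bitstream, codes) == data)  # True
```

The encoded stream is 11 bits long (1 + 2 + 3 + 4 + 1), and it decodes back to the original five values without any separators between code words.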

| Actual data (Probabilities) | 45 | 85 | 100 | 145 | 45 |
|---|---|---|---|---|---|
| Modified | 45 | 85 | 100 | 145 | 45 |
| Compressed | 1 | 1 | 1 | 1 | 1 |

Table 1. After code conversion process.

After the Huffman code has been generated for each symbol, the condition-based code conversion is done by arranging the symbols with the shortest code length first. The merging-based code conversion is used to compress the data. From the original data, the frequent combinations of two symbols are considered first, and merging is applied only to the combinations that satisfy the conditions. According to the merging conditions, first, the combination with the symbol (45) is repeated at least two times, which qualifies it for the merging process. Second, the same combination with the first digit of the selected pair (45) must not be repeated. Third, the bit length of the first position of the pair (1) must be less than the bit length of the second position of the pair (01). Finally, the MHE-based encoded data for the original data is obtained; Table 2 shows the symbols with their codes after the code conversion process.

The benchmark in image compression is the compression ratio. The compression ratio is used to quantify the capability of data compression by comparing the size of the compressed image with the size of the original image.

Result and Discussion

This section discusses the implementation of the image compression based on the wavelet and encoding scheme described in the preceding section. The performance is tested on different images, and the results are compared with existing techniques.

Experimental setup

The proposed image compression has been implemented on the MATLAB platform (version R2015a). The procedure is executed on a Windows machine with a dual-core CPU, 1 GB of RAM, and a clock speed of 2.70 GHz, running Microsoft Windows 7 Professional.

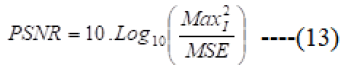

Database description

This compression study considers compound images collected from the web; these images contain text, graphics, animation, scanned images and so forth. The test database is shown in Figure 5.

Evaluation metrics

Compression ratio (CR): The compression ratio is defined as the ratio of the size of the original image and the size of the compressed bit stream. The CR equation is given in equation (5).

Compression size (CS): Compression size, as well as decompression size, is indicated as the amount of time required to compress and decompress an image, respectively.

Mean square error: MSE is the difference between the values implied by an estimator and the true values of the quantity being estimated. MSE measures the mean of the squares of the "errors". The error is the amount by which the value implied by the estimator differs from the quantity to be estimated.

Peak signal to noise ratio (PSNR): The peak signal to noise ratio (PSNR) is calculated using the following equation:

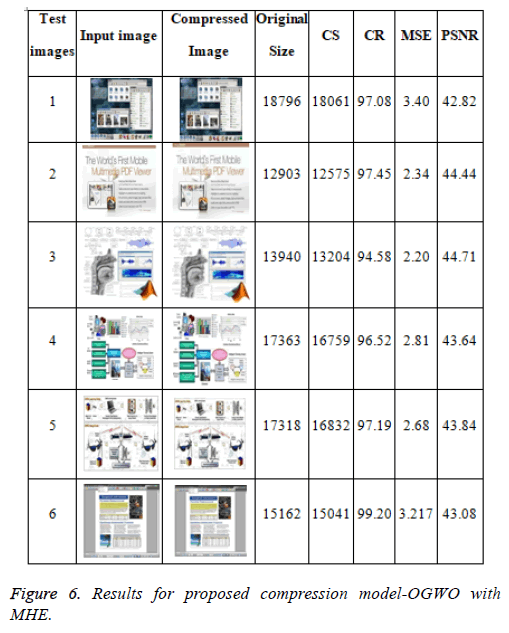

Figure 6 shows the original image, the decompressed image, and the compression ratio for different compound images; all image files are examined at different sizes. The compression ratio of the proposed algorithm is better than that of the other available algorithms. In addition, the algorithm is able to compress large images that JPEG failed to compress. With OGWO optimization of the DWT coefficients, the highest PSNR is 31.69 for image 3, the MSE of that image is 2.4035, and the difference in compressed file size is 1300; similar differences are observed for the other images.

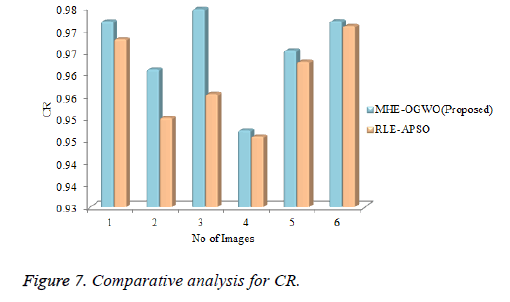

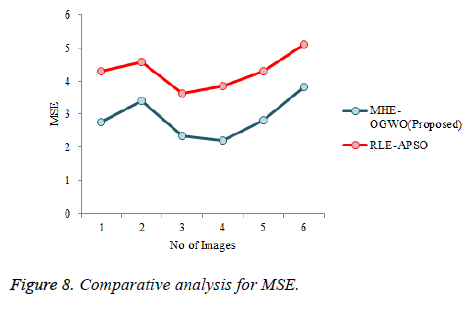

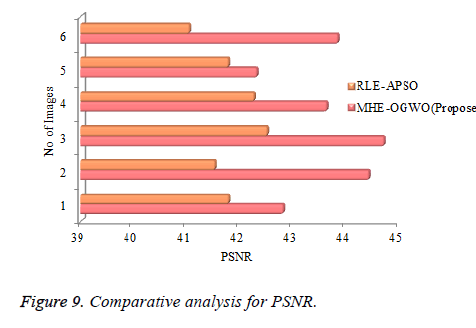

Figures 7-9 show the PSNR, MSE, and CR of the proposed strategy and compare the RLE-APSO and MHE-OGWO procedures for image compression. The proposed MHE-OGWO compression process attains a final value of 97.2, as shown in Figure 5; compared with the other methods, the difference from the existing procedure is 0.45%. For image 2 compared with image 3, the MHE-OGWO method attains 92.2, and the difference from the other method is 0.04%. Figure 9 shows the PSNR analysis of the proposed and other strategies; the maximum PSNR is 44.77, and similar values are achieved for the other images. Across all algorithms, the mean difference is 2.35%. Figure 8 shows the MSE comparison for all methods; the minimum error value is 2.82 for the MHE-OGWO process.

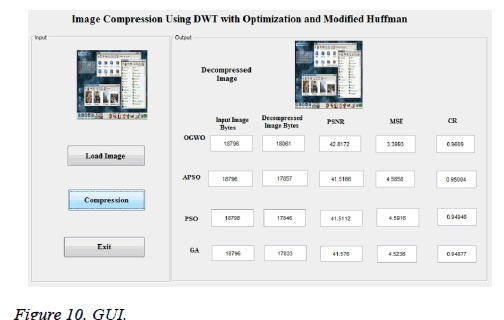

Compression and decompression time denote the time taken by the algorithm to perform encoding and decoding, as shown in Tables 2 and 3. The MHE algorithm compresses the image, and the compressed image is then passed on for encryption. The encoded image is compared with existing approaches such as PSO, APSO, and the Genetic Algorithm (GA). In this comparison, the proposed OGWO model attains the optimal value.

| Technique | Image 1 MSE | Image 1 PSNR | Image 2 MSE | Image 2 PSNR | Image 3 MSE | Image 3 PSNR | Image 4 MSE | Image 4 PSNR | Image 5 MSE | Image 5 PSNR |
|---|---|---|---|---|---|---|---|---|---|---|
| PSO | 4.31 | 41.78 | 3.87 | 42.25 | 4.63 | 41.47 | 5.12 | 41.04 | 3.87 | 42.25 |
| APSO | 4.31 | 41.55 | 3.87 | 42.26 | 4.26 | 41.84 | 4.63 | 41.48 | 3.64 | 42.52 |
| GA | 3.74 | 42.4 | 4.56 | 41.54 | 5.06 | 41.09 | 4.52 | 41.58 | 3.56 | 42.62 |
| OGWO-proposed | 2.75 | 43.73 | 3.4 | 42.56 | 2.2 | 44.71 | 2.68 | 43.69 | 3.22 | 43.06 |

Table 2. Optimization model based metrics.

| Technique | Image 1 CS | Image 1 CR | Image 2 CS | Image 2 CR | Image 3 CS | Image 3 CR | Image 4 CS | Image 4 CR | Image 5 CS | Image 5 CR |
|---|---|---|---|---|---|---|---|---|---|---|
| Run length coding | 13185 | 0.95 | 16715 | 0.96 | 14876 | 0.98 | 16715 | 0.96 | 14793 | 0.97 |
| Huffman Coding | 14805 | 0.97 | 12314 | 0.95 | 13193 | 0.95 | 14942 | 1.05 | 17846 | 0.95 |
| Merging based Huffman coding | 12575 | 0.97 | 16759 | 0.97 | 16832 | 0.97 | 14855 | 0.97 | 14729 | 1.03 |

Table 3. Encoding based metrics.

Figure 10 presents one set of inputs to the method as run through the interface implemented in MATLAB, together with the comparative graph. The figure shows the input image and the decompressed images, along with the PSNR, MSE, and CR obtained with MHE-OGWO.

Conclusion

Image compression is an important factor in reducing transmission and storage costs. All image compression schemes are useful in their respective fields, and new compression schemes that provide better compression ratios emerge every day. The proposed strategy can be used as a base model for further study. DWT-based methods are slightly faster than DCT-based algorithms because there is no need to divide the image into square blocks. In addition, the Haar wavelet was used to keep the implementation simple, and OGWO was used to optimize the Haar coefficient values. The Haar wavelet transform has low computational complexity. Using MHE is a sound choice because it compresses long runs of redundant data effectively. The proposed MHE-OGWO scheme achieves 97.2 in CR, 44.23 in PSNR, and 2.25 in MSE. Compared with existing methods, the proposed model compresses the image with the best PSNR value. The difference between the proposed and existing models is 0.437% in terms of PSNR. In future work, DCT coefficient values will be optimized to compress the images.

References

- Kumar M, Vaish A. Color image encryption using MSVD, DWT and Arnold transform in fractional Fourier domain. J Light Electron Optic 2016; 1-24.

- Emmanuel BS, Mu'azu MB, Sani SM, Garba S. A review of wavelet-based image processing methods for fingerprint compression in the biometric application. Brit J Mathematics Comp Sci 2014; 4: 1-18.

- King GG, Christopher CS, Singh NA. Compound image compression using the parallel Lempel-Ziv-Welch algorithm. IEEE 2013; 1-5.

- Banerjee A, Halder A. An efficient dynamic image compression algorithm based on block optimization, byte compression and run-length encoding along Y-axis. In Computer Science and Information Technology (ICCSIT) 2010; 8: 356-360.

- Kuruvilla AM. Tiled image container for web compatible compound image compression. IEEE 2012; 182-187.

- Jindal P, Kaur RB. Various approaches of colored image compression using Pollination Based Optimization. Int J Adv Res Comp Sci 2014; 7: 129-132.

- Jeyanthiand PS. A survey on medical images and compression techniques. J Adv Sci Eng Technol 2016; 4: 6-10.

- Kaur R, Kaur M. DWT based hybrid image compression for smooth internet transfers. J Eng Comp Sci 2014; 3: 9447-9449.

- Amin B, Patel A. Vector quantization based lossy image compression using wavelets-a review. J Innov Res Sci Eng Technol 2014; 3: 10517-10523.

- Lan C, Xu J, Zeng W, Wu F. Compound image compression using lossless and lossy LZMA in HEVC. In Multimedia and Expo (ICME). IEEE International Conference 2015; 1-6.

- Kaur P, Parminder S. A review of various image compression techniques. J Comp Sci Mobile Computing 2015; 4: 1-8.

- Chen DK, Han JQ. Design optimization of DWT structure for implementation of image compression techniques. In Wavelet Analysis and Pattern Recognition, 2008 (ICWAPR'08). IEEE International Conference 2008; 1: 141-145.

- Raghuwanshi P, Jain A. A review of image compression based on wavelet transforms function and structure optimization technique. Int J Comp Technol Appl 2013; 4: 527-532.

- Karras DA. Improved compression of MRSI images involving the discrete wavelet transform and an integrated two level restoration methodology comparing different textural and optimization schemes. IEEE 2013; 178-183.

- Senthilkumaran N, Vinodhini M. Comparison of image compression techniques for MRI brain image. J Eng Trends Appl 2017; 4.

- Karras DA. Efficient medical image compression/reconstruction applying the discrete wavelet transform on texturally clustered regions. IEEE 2005; 87-91.

- Ansari IA, Pant M. Multipurpose image watermarking in the domain of DWT based on SVD and ABC. Pattern Recognition Lett 2017; 94: 228-236.

- Gopal J, Gupta G, Gupta KL. An advanced comparison approach with RLE for image compression. J Adv Res Comp Sci Software Eng 2014; 4: 95-99.

- Hu G, Xiao D, Wang Y, Xiang T. An image coding scheme using parallel compressive sensing for simultaneous compression-encryption applications. J Visual Communication Image Representation 2017; 44: 116-127.

- Mishra A, Agarwal C, Sharma A, Bedi P. Optimized gray-scale image watermarking using DWT-SVD and Firefly algorithm. Expert Systems with Applications 2014; 41: 7858-7867.

- Zhang Y, Xu B, Zhou N. A novel image compression-encryption hybrid algorithm based on the analysis sparse representation. Optics Communications 2017; 392: 223-233.

- Ziran P, Guojun W, Jiang H, Shuangwu M. Research and improvement of ECG compression algorithm based on EZW. Comp Methods Programs Biomed 2017; 145: 157-166.

- Karimi N, Samavi S, Soroushmehr SR, Shirani S, Najarian K. Toward practical guideline for the design of image compression algorithms for biomedical applications. Expert Systems with Applications 2016; 56: 360-367.

- Chang BM, Tsai HH, Yen CY. SVM-PSO based rotation-invariant image texture classification in SVD and DWT domains. Engineering Applications of Artificial Intelligence 2016; 52: 96-107.

- Turcza P, Duplaga M. Near-lossless energy-efficient image compression algorithm for wireless capsule endoscopy. Biomed Signal Processing Control 2017; 8: 1-8.