ISSN: 0970-938X (Print) | 0976-1683 (Electronic)

Biomedical Research

An International Journal of Medical Sciences

Research Article - Biomedical Research (2017) Volume 28, Issue 20

Modified emotion recognition system to study the emotion cues through thermal facial analysis

Priya MS1,2* and Kadhar Nawaz GM3

1Research Scholar, Department of Computer Science, Bharathiar University, Coimbatore, India

2St. Anne's FGCW, Bengaluru, India

3Associate Lecturer, Department of Computer Applications, Sona College of Technology, Salem, India

*Corresponding Author: Priya MS, Research Scholar, Department of Computer Science, Bharathiar University, India

Accepted date: September 22, 2017

Recognition of facial expressions is a potential application of emotion analysis. This research focuses on studying the emotion cues hidden in the human face. Even the slightest anxiety or excitement can trigger a warm spread across the cheeks; similarly, face temperature plummets when a person is startled by a loud noise. Thermal facial images are therefore used in this research to analyze emotions from the heat map spread across the face. The recognition system is framed and designed on concepts from visible-spectrum recognition, and working with thermal images allows the recognition method to remain effective in poorly illuminated environments. The proposed method, called Modified Thermal Emotion Recognition (MTER), uses the Eigenface technique, which is of great help when dealing with a large database of faces, together with PCA (principal component analysis), chosen for its simplicity, speed and learning capability; together, PCA and Eigenfaces represent face images efficiently. Once the weights are derived from the original images, the ADA Boost algorithm is applied to simplify and reduce the number of weights, which keeps the calculations manageable for a large image database. SIFT and GLCM algorithms are then used to extract features from the images, and these features are used to train the database.

Keywords

Emotion recognition, Thermal images, Eigen face, ADA boost, SIFT, GLCM, FFNN.

Introduction

Emotional intelligence relates to facial expressions. At present, there is little literature on solving the facial expression recognition (FER) problem in thermal images. The major difficulties in facial analysis are skin texture, disturbance caused by glasses, and regions of the face that do not represent emotions. Advances in sophisticated, fine-grained sensing technology have led to the exploration of abstract and qualitative objects such as emotions.

Manual analysis of human emotions depends entirely on the judgement of an expert; such analysis is prone to inaccuracy, and the results obtained are only average. Another available technique is the polygraph examination. Its accuracy rate is 90%, but its main disadvantages are that it requires the cooperation of the subject and is a very long process, consuming a tremendous amount of time before an outcome is delivered. Hence, this paper discusses thermal imaging, which uses facial expressions, does not need the cooperation of the subject, and delivers results quickly.

Thermal imaging is based on the heat radiated by the facial skin. Thermal images can be used under both instantaneous and sustained stress, since both conditions bring about changes in blood flow. These blood-flow changes alter the heat generated in the facial tissue, and it is these heat changes that the thermal imaging process detects and analyzes. In this paper, we use thermal facial analysis to recognize the emotional state of a person. The novelty of this work opens the way for future research, and the proposed algorithm could find wide application in medicine for monitoring human health.

Applications of thermal facial analysis

Thermal facial analysis allows non-contact, non-invasive investigation of human emotion cues. Many researchers have concluded that the concealed information test [1] is more appropriate for testing psychopathic offenders, who demonstrate a lack of emotion. Extracting the forehead thermal signature after a mock crime scenario has given a promising success rate in lie detection [2]. Thermal cameras can capture facial skin temperature, which helps to classify cognitive workload [3], and anxiety can be detected by measuring the breathing rate along with the facial skin temperature [4].

Proposed Methodology - Modified Thermal Emotion Recognition

The proposed method consists of four major stages:

• Eigenvectors, eigenvalues and PCA

• ADA Boost algorithm

• SIFT and GLCM feature extraction

• FFNN: feed-forward neural networks

Eigenfaces together with PCA reduce each image to a manageable size. ADA Boost simplifies the complex eigen-image representation. SIFT and GLCM derive the important features from the image, and the feed-forward neural network (FFNN) recognizes the image against the database.

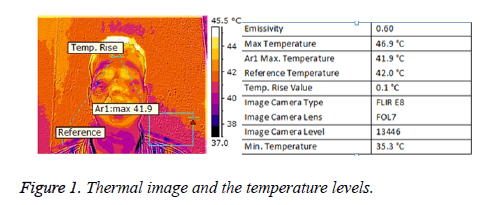

Face library

In the proposed method, we use a real-time face library, since it is free from noise and easy to use. The face library was collected with a FLIR E8 thermal camera, which has an IR resolution of 320 × 240 pixels, a thermal sensitivity of < 0.06°C and a temperature range of −20 to 250°C. The loaded training images are converted into vectors and stored in a data file that pairs each image vector with a class number used to differentiate the images (Figure 1).
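The conversion of training images into vectors with class numbers can be sketched in Python with NumPy. This is a minimal illustration, assuming each thermal frame is already available as a 2-D array of temperature or intensity values at the camera's 320 × 240 resolution; the function names `build_face_library` and `save_face_library` and the `.npz` storage format are hypothetical, not the authors' actual data file.

```python
import numpy as np

def build_face_library(images, labels):
    """Flatten each 2-D thermal image into a row vector and pair it with a class number.

    images: sequence of 2-D arrays with identical shape (e.g. 240 x 320 frames).
    labels: sequence of integer class numbers, one per image.
    Returns (X, y) where X has shape (num_images, height * width).
    """
    if len(images) != len(labels):
        raise ValueError("every image needs exactly one class number")
    shape = np.asarray(images[0]).shape
    rows = []
    for img in images:
        img = np.asarray(img, dtype=np.float64)
        if img.shape != shape:
            raise ValueError("all images must have the same resolution")
        rows.append(img.ravel())  # row-major flattening, e.g. 240 x 320 -> 76800 values
    return np.vstack(rows), np.asarray(labels, dtype=np.int64)

def save_face_library(path, X, y):
    # Hypothetical storage format: a compressed NumPy archive of vectors and class numbers.
    np.savez_compressed(path, vectors=X, classes=y)
```

Keeping the vectors and class numbers in two aligned arrays makes the later PCA step a single matrix operation on `X`.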

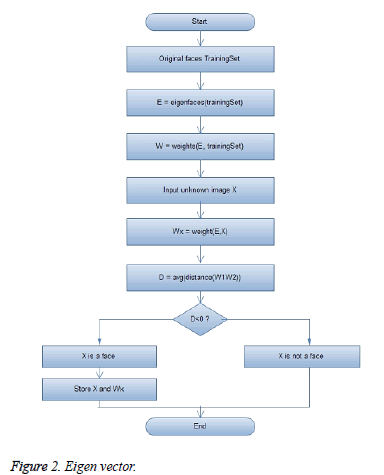

Eigenvectors, eigenvalues and PCA

Initially, eigenvalues are used together with PCA for face recognition (Figure 2). This involves the following steps:

1. Consider the original training images, each flattened into a vector, as [x1, x2, …, xn].

2. Calculate the average of the training set images:

$$\mu_x = \frac{1}{n}\sum_{i=1}^{n} x_i \qquad (1)$$

3. Subtract the mean from each face:

$$X_i = x_i - \mu_x, \qquad i = 1, 2, \ldots, n$$

This subtraction ensures that the first principal component conveys the direction of maximum variance rather than the direction of the mean image.

4. Applying PCA to the mean-subtracted data X yields a new set of eigen-images, [x1y1, x2y2, …, xnyn].
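Steps 1 to 4 can be illustrated with a short NumPy sketch of the standard eigenface computation. It assumes the training images are already flattened into the rows of a matrix, and it uses the common trick of diagonalizing the small n × n matrix instead of the full pixel covariance; the function names and the number `k` of retained components are illustrative assumptions, not details given in the paper.

```python
import numpy as np

def eigenfaces(X, k):
    """Mean face and top-k eigenfaces of a training set.

    X: array of shape (n, d), one flattened training image per row.
    Returns (mu, U): mu has shape (d,), U has shape (d, k) with orthonormal columns.
    """
    n = X.shape[0]
    if not 0 < k < n:
        raise ValueError("k must be between 1 and n - 1")
    mu = X.mean(axis=0)                  # step 2: average training image, Eq. (1)
    A = X - mu                           # step 3: subtract the mean from each face
    # With n << d, diagonalise the small n x n matrix A A^T / n instead of the
    # d x d covariance; an eigenvector v of it maps to an eigenface u = A^T v.
    small = A @ A.T / n
    evals, V = np.linalg.eigh(small)     # eigenvalues come back in ascending order
    order = np.argsort(evals)[::-1][:k]  # keep the k largest
    U = A.T @ V[:, order]
    U /= np.linalg.norm(U, axis=0)       # normalise each eigenface to unit length
    return mu, U

def project(X, mu, U):
    """Step 4: weights of each mean-subtracted image in eigenface space, shape (n, k)."""
    return (X - mu) @ U
```

The rows returned by `project` are the per-image weights that the boosting stage then works on.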

ADA boost algorithm

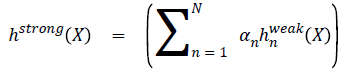

Boosting is a technique for improving the accuracy of a learning algorithm by combining weak classifiers into a strong one [5,6]. Here, the ADA Boost algorithm is applied to the derived eigenvectors [7].

1. Consider the final set derived from the eigenvectors, $(a_1, b_1), (a_2, b_2), \ldots, (a_L, b_L)$.

2. Let $a_i \in \mathbb{R}^P$ be a sample from the set and $b_i \in \{-1, +1\}$ its corresponding label.

3. Initialize the weight distribution over the set uniformly, $p_1(a_i) = 1/L$.

4. In every boosting round $n$, a new weak classifier $h_n^{weak}: X \to \{-1, +1\}$ and its weight $\alpha_n$ are computed.

5. The distribution $p(a)$ is then updated so that misclassified samples receive more weight than correctly classified ones. Hence the boosting focuses on the difficult examples.

6. The iterations continue, each producing another weak classifier, until the specified number $N$ of weak classifiers is attained.

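7. A strong classifier $h_{strong}(X)$ is obtained from a linear combination of the set of $N$ weak classifiers $h_n^{weak}(X)$, weighted by $\alpha_n$:

$$h_{strong}(X) = \operatorname{sign}\left(\sum_{n=1}^{N} \alpha_n\, h_n^{weak}(X)\right) \qquad (2)$$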

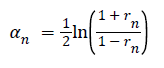

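The weight $\alpha_n$ of each weak classifier is derived from its weighted training error $e_n$:

$$e_n = \sum_{i=1}^{L} p_n(a_i)\,[\,h_n^{weak}(a_i) \neq b_i\,], \qquad \alpha_n = \frac{1}{2}\ln\frac{1 - e_n}{e_n}$$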

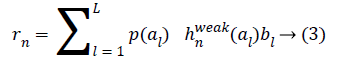

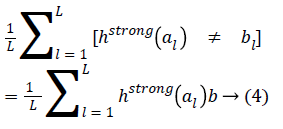

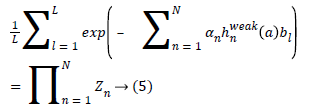

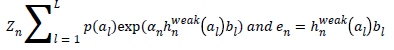

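8. To show that the boosting algorithm reduces the error, consider the training error of the strong classifier over the $L$ samples:

$$E = \frac{1}{L}\sum_{i=1}^{L} [\,h_{strong}(a_i) \neq b_i\,]$$

9. This error is bounded by

$$E \le \prod_{n=1}^{N} Z_n$$

where $Z_n$ is the normalization factor of round $n$, which keeps the updated weights $p_{n+1}(a_i) = p_n(a_i)\exp(-\alpha_n b_i h_n^{weak}(a_i))/Z_n$ a probability distribution:

$$Z_n = \sum_{i=1}^{L} p_n(a_i)\exp\left(-\alpha_n b_i h_n^{weak}(a_i)\right)$$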

Choosing $\alpha_n$ to minimize $Z_n$ gives $Z_n = 2\sqrt{e_n(1 - e_n)}$, which is less than 1 whenever $e_n < 1/2$; the bound therefore shrinks in each round $n$, which shows that boosting minimizes the training error. The aim of applying boosting here is to make each feature correspond to a weak classifier, so that boosting selects a strong subset of the features. In every iteration, the features are evaluated on the positive and negative training samples and a hypothesis is generated for each; boosting then selects the best hypothesis, i.e. the one with the lowest weighted error, as the weak classifier $h_n^{weak}$. Hence the feature selection depends on the error $e_n$.
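The boosting steps above can be made concrete with a minimal discrete AdaBoost sketch in NumPy. The paper does not state which weak classifier is used, so this sketch assumes single-feature threshold classifiers (decision stumps) on the eigenface weights and binary labels in {−1, +1}; distinguishing several emotions would need a one-vs-rest or multi-class extension that is not shown. All function names are hypothetical.

```python
import numpy as np

def stump_predict(A, j, t, s):
    """Weak classifier: s * (+1 if A[:, j] > t else -1)."""
    return s * np.where(A[:, j] > t, 1, -1)

def fit_stump(A, b, p):
    """Return (weighted error, feature j, threshold t, polarity s) of the best stump."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(A.shape[1]):
        for t in np.unique(A[:, j]):
            for s in (1, -1):
                err = p[stump_predict(A, j, t, s) != b].sum()
                if err < best[0]:
                    best = (err, j, t, s)
    return best

def adaboost(A, b, N):
    """Discrete AdaBoost. A: (L, P) samples; b: labels in {-1, +1}; N: rounds."""
    L = A.shape[0]
    p = np.full(L, 1.0 / L)                   # step 3: uniform weights p(a_i) = 1/L
    model = []
    for _ in range(N):
        e, j, t, s = fit_stump(A, b, p)       # weak classifier with weighted error e_n
        e = np.clip(e, 1e-12, 1 - 1e-12)      # avoid log(0) for a perfect stump
        alpha = 0.5 * np.log((1 - e) / e)
        h = stump_predict(A, j, t, s)
        p = p * np.exp(-alpha * b * h)        # misclassified samples gain weight
        p /= p.sum()                          # divide by Z_n to renormalise
        model.append((alpha, j, t, s))
    return model

def strong_classify(model, A):
    """Eq. (2): sign of the alpha-weighted sum of the weak classifiers."""
    score = sum(alpha * stump_predict(A, j, t, s) for alpha, j, t, s in model)
    return np.where(score >= 0, 1, -1)
```

Because each stump looks at one feature, the features chosen across rounds (`j` in `model`) also act as the boosted feature subset described above.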

SIFT and GLCM feature extraction

The most challenging aspect of thermal image recognition is the retrieval system. Many researchers have focused on producing robust and informative representations in order to achieve high retrieval accuracy [8], and many methods are available for retrieving similar faces from a dataset. In this system, the test image is provided as input and noise is first removed from it. Features are then extracted from the face image using the SIFT and GLCM algorithms [9]. The database is trained by extracting the same features from all the images it contains. The test-image features and the training-image features are then passed to a KNN classifier, which checks for matching faces in the database, and the most similar face image is retrieved [10].
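The KNN matching step could look like the following NumPy sketch. It assumes each image has already been reduced to a fixed-length feature vector (for example, concatenated SIFT and GLCM statistics), uses Euclidean distance with a majority vote, and the function name and default `k=3` are illustrative assumptions rather than the authors' settings.

```python
import numpy as np
from collections import Counter

def knn_predict(train_feats, train_labels, test_feats, k=3):
    """Label each test vector by majority vote among its k nearest training vectors.

    Distance is Euclidean. On a tied vote, the label met first among the
    nearest neighbours wins (Counter.most_common keeps first-seen order on ties).
    """
    train_feats = np.asarray(train_feats, dtype=float)
    test_feats = np.atleast_2d(np.asarray(test_feats, dtype=float))
    # pairwise squared distances, shape (num_test, num_train)
    d2 = ((test_feats[:, None, :] - train_feats[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1, kind="stable")[:, :k]
    preds = []
    for row in nearest:
        votes = Counter(train_labels[i] for i in row)
        preds.append(votes.most_common(1)[0][0])
    return preds
```

With `k=1`, the index in `nearest[:, 0]` directly identifies the most similar database image to retrieve.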

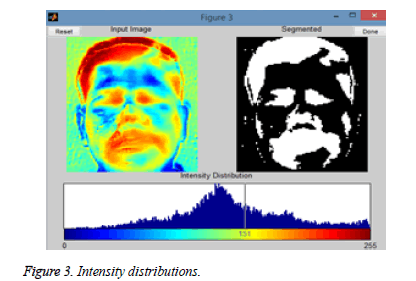

SIFT (scale-invariant feature transform): SIFT detects local features in an image. When a face image is provided as input, the SIFT algorithm extracts features of the eyes, nose and lips, giving a detailed description of the image. The descriptions extracted from the training images are used to identify the object in the test images [11]. To improve the quality of the results, the features in the training image must remain detectable despite noise and changes in illumination. This is achieved by considering high-contrast regions such as object edges (Figure 3).

Another requirement is that the relative positions of the features in the original image should not change from one image to another. The extreme points of the scale space are calculated first, after which these keypoints are localized by interpolating the nearby points [12].
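The first SIFT stage, finding extreme points in a difference-of-Gaussians (DoG) scale space, can be sketched in pure NumPy as below. This is a simplified single-octave illustration under stated assumptions (input scaled to [0, 1], hypothetical defaults `sigma0=1.6`, `k=√2` and a contrast threshold of 0.02); it omits the sub-pixel interpolation, edge rejection, orientation assignment and descriptors of full SIFT, so a library implementation such as OpenCV's SIFT would be used in practice.

```python
import numpy as np

def gaussian_kernel(sigma):
    """Normalised 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()

def blur(img, sigma):
    """Separable Gaussian blur; borders are handled by repeating edge pixels."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)

def dog_extrema(img, sigma0=1.6, num_scales=5, k=2 ** 0.5, threshold=0.02):
    """Return (row, col, level) of DoG samples at least as large, or at least as
    small, as all 26 neighbours in space and scale (single octave only)."""
    img = np.asarray(img, dtype=float)
    gauss = [blur(img, sigma0 * k ** i) for i in range(num_scales)]
    dog = np.stack([gauss[i + 1] - gauss[i] for i in range(num_scales - 1)])
    levels, H, W = dog.shape
    points = []
    for s in range(1, levels - 1):          # need a DoG level above and below
        for y in range(1, H - 1):
            for x in range(1, W - 1):
                v = dog[s, y, x]
                if abs(v) < threshold:      # discard low-contrast responses
                    continue
                cube = dog[s - 1:s + 2, y - 1:y + 2, x - 1:x + 2]
                if v == cube.max() or v == cube.min():
                    points.append((y, x, s))
    return points
```

A bright, blob-like warm region produces a negative DoG minimum at its centre, at the level whose blur best matches the blob size.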

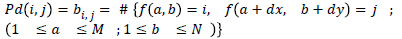

GLCM feature extraction: The gray-level co-occurrence matrix (GLCM) records gray-level values together with their relative positions. Consider $P_d[i,j]$, where $d = (d_x, d_y)$ is a displacement. All pairs of pixels separated by $d$ are considered: whenever the first pixel of a pair has gray level $i$ and its matching pixel has gray level $j$, the entry in the $i$th row and $j$th column of $P_d$ is incremented.

The GLCM is thus defined as

$$P_d[i,j] = b_{i,j}, \qquad 0 \le i, j < G$$

where $b_{i,j}$ is the number of times the gray-level pair $(i,j)$ occurs in the image at displacement $d$, and $G \times G$ is the dimension of the co-occurrence matrix, $G$ being the number of gray levels. Twelve different statistical features are then extracted from this co-occurrence matrix [13].
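A direct implementation of the co-occurrence count, followed by a few standard statistics, might look like the NumPy sketch below. It assumes the image has already been quantized to integer gray levels in [0, G); the function names are hypothetical, and the four statistics shown are common Haralick-style examples, not necessarily among the 12 features used in the paper.

```python
import numpy as np

def glcm(img, dx, dy, levels):
    """Co-occurrence counts P_d[i, j] for displacement d = (dx, dy).

    Entry [i, j] counts pixel pairs (r, c) and (r + dy, c + dx) whose gray
    levels are i and j respectively; pairs falling outside the image are skipped.
    """
    img = np.asarray(img)
    H, W = img.shape
    P = np.zeros((levels, levels), dtype=np.int64)
    for r in range(H):
        for c in range(W):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < H and 0 <= c2 < W:
                P[img[r, c], img[r2, c2]] += 1
    return P

def glcm_features(P):
    """A few common statistics of the normalised co-occurrence matrix."""
    p = P / P.sum()
    i, j = np.indices(p.shape)
    nz = p > 0
    return {
        "contrast": float(((i - j) ** 2 * p).sum()),
        # angular second moment; some libraries report its square root as "energy"
        "energy": float((p ** 2).sum()),
        "homogeneity": float((p / (1.0 + np.abs(i - j))).sum()),
        "entropy": float(-(p[nz] * np.log2(p[nz])).sum()),
    }
```

For example, `glcm(img, dx=1, dy=0, levels=G)` counts horizontally adjacent pixel pairs; other displacements capture texture in other directions.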

FFNN-feed forward neural networks

The Thermal Face Recognition System (TFRS) has two major parts: image processing first, followed by classification. The image-processing component takes the thermal image and extracts features such as the eyes, nose and mouth, which is done through eigenfaces. The second part is the feed-forward neural network, which starts from the extracted features of the thermal image and ends with the classification.

In the proposed method, emotions are recognized by applying neural networks to the extracted facial features.

Pre-processing for the neural network: The extracted features are pre-processed before being fed to the network as input. First, they are resized as required: the eye block is resized to 28 × 20 and the mouth block to 20 × 32. Each 2-D matrix is then transformed into a 1-D vector so that it can be placed in a single column, giving a 560 × 1 column vector for the eye block (28 × 20 = 560) and a 640 × 1 column vector for the mouth block (20 × 32 = 640). Together, the eye and mouth vectors form a 1200 × 1 column vector, which is delivered as the input to the neural network [14].
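The resizing and stacking arithmetic (560 + 640 = 1200) can be checked with the small NumPy sketch below. The paper does not specify the interpolation method, whether the block sizes are given as rows × columns, or the flattening order, so nearest-neighbour resizing, (rows, cols) shapes and column-major flattening are assumptions here, and the function names are hypothetical.

```python
import numpy as np

EYE_SHAPE = (28, 20)    # block sizes from the text, assumed to be (rows, cols)
MOUTH_SHAPE = (20, 32)

def resize_nearest(block, shape):
    """Nearest-neighbour resize of a 2-D array to `shape` (rows, cols)."""
    block = np.asarray(block, dtype=np.float64)
    rows = (np.arange(shape[0]) * block.shape[0] / shape[0]).astype(int)
    cols = (np.arange(shape[1]) * block.shape[1] / shape[1]).astype(int)
    return block[np.ix_(rows, cols)]

def network_input(eye_block, mouth_block):
    """Resize both blocks, flatten each column-wise and stack into a (1200, 1) column."""
    eye = resize_nearest(eye_block, EYE_SHAPE).reshape(-1, 1, order="F")        # 560 x 1
    mouth = resize_nearest(mouth_block, MOUTH_SHAPE).reshape(-1, 1, order="F")  # 640 x 1
    return np.vstack([eye, mouth])
```

Any consistent flattening order works, as long as training and test images use the same one.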

Training algorithm and network architecture: A multilayer feed-forward network is used. After several trials, a three-layer feed-forward network with two hidden layers and one output layer was chosen. The back-propagation training algorithm is used because of its simplicity and efficiency.
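A minimal NumPy sketch of this architecture, two sigmoid hidden layers plus a sigmoid output layer trained by full-batch back-propagation on mean squared error, is shown below. The activation function, loss, learning rate, initialization and hidden-layer widths are not given in the paper and are assumptions; the function names are hypothetical.

```python
import numpy as np

def init_net(sizes, seed=0):
    """One (weights, bias) pair per layer, e.g. sizes = [inputs, h1, h2, outputs]."""
    rng = np.random.default_rng(seed)
    return [(rng.normal(0, 1 / np.sqrt(m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(net, X):
    """Return the activations of every layer, starting with the input X (rows = samples)."""
    acts = [X]
    for W, b in net:
        acts.append(sigmoid(acts[-1] @ W + b))
    return acts

def train(net, X, Y, lr=0.5, epochs=2000):
    """Full-batch gradient descent with back-propagation; returns the loss per epoch."""
    losses = []
    for _ in range(epochs):
        acts = forward(net, X)
        out = acts[-1]
        losses.append(float(((out - Y) ** 2).mean()))
        # gradient of the mean squared error w.r.t. the output pre-activation
        delta = 2 * (out - Y) / Y.size * out * (1 - out)
        for k in range(len(net) - 1, -1, -1):
            W, b = net[k]
            gW = acts[k].T @ delta
            gb = delta.sum(axis=0)
            if k > 0:  # propagate through the old weights before updating them
                delta = (delta @ W.T) * acts[k] * (1 - acts[k])
            net[k] = (W - lr * gW, b - lr * gb)
    return losses
```

For the 1200-value input described above, the network could be created with something like `init_net([1200, 64, 32, 11])`, where 11 is the number of emotion classes in Table 1 and the hidden widths 64 and 32 are placeholders.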

Training samples and network simulation: To train the network to identify the various emotions, different images from our own database are used. Some sample faces used for training are shown in Figure 4.

The eye and mouth blocks are extracted from the gray image and used for training, with samples covering the various emotions. After training, the network is analyzed using test samples (Figure 5).

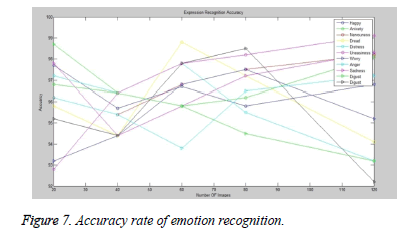

Experimental Results

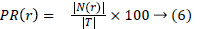

If the comparison finds a suitable image, i.e. a match within the threshold, the face is classified as Known; otherwise it is classified as Unknown. For a known face, the personal information is retrieved from the database; otherwise, an error message stating "Match not found" is displayed [15-21]. The obtained recognition rates and their accuracy are presented below. Table 1 reports the results using the Cumulative Match Characteristic (CMC) metric, defined by the following equation:

$$\mathrm{CMC}(r) = \frac{|N(r)|}{|T|}$$

Here, $T$ is the set of test images and $N(r)$ is the set of test images in $T$ that are recognized correctly within the top $r$ ranks. The same results are shown graphically in Figure 5, based on the readings in Table 1.
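Given a similarity score between every test image and every gallery image, the CMC curve can be computed as in the following NumPy sketch. The score-matrix layout and the function name are assumptions; a test image whose identity is absent from the gallery is simply never counted as recognized.

```python
import numpy as np

def cmc_curve(scores, gallery_ids, probe_ids, max_rank):
    """CMC(r) = |N(r)| / |T| for r = 1..max_rank.

    scores: (|T|, G) similarity matrix, higher means more similar.
    A test image counts towards N(r) when its true identity is among the top-r gallery entries.
    """
    scores = np.asarray(scores, dtype=float)
    gallery_ids = np.asarray(gallery_ids)
    order = np.argsort(-scores, axis=1, kind="stable")     # most similar first
    ranked_ids = gallery_ids[order]
    hits = ranked_ids == np.asarray(probe_ids)[:, None]
    # 0-based rank of the first correct match; G (never counted) if there is none
    first_hit = np.where(hits.any(axis=1), hits.argmax(axis=1), scores.shape[1])
    return np.array([(first_hit < r).mean() for r in range(1, max_rank + 1)])
```

Multiplying the result by 100 gives percentages in the same form as Table 1.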

| Emotion | Recognition rate (%) |
|---|---|
| Anxiety | 98.2 |
| Nervousness | 98.1 |
| Dread | 98.9 |
| Distress | 97.9 |
| Uneasiness | 98.3 |
| Worry | 97.6 |
| Angry | 98.4 |
| Sadness | 98.8 |
| Fear | 95.3 |
| Happiness | 97.8 |
| Disgust | 94.4 |

Table 1: Output of the proposed MTER system.

• Conventional classifiers without the neural models show a decrease in recognition rate of up to 17.9%.

• Processing a test image takes 0.0357 s. The recognition rate can be improved considerably if negative training is provided to the network along with the neutral state.

• It is concluded that the Modified Thermal Emotion Recognition approach is more robust than a direct emotion classification approach.

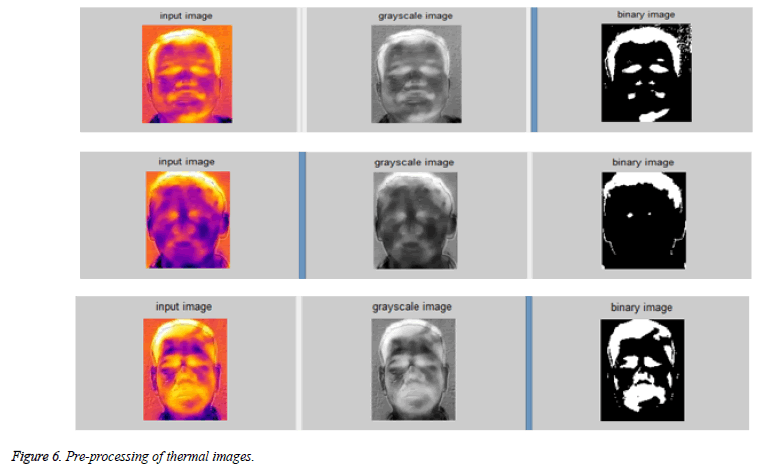

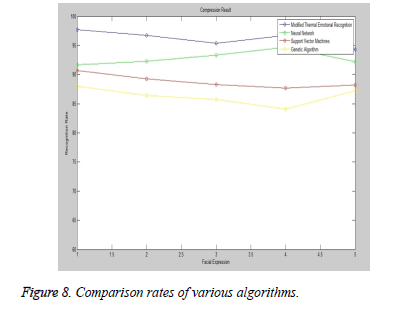

Negative training is observed to improve the recognition rates, delivering better results even with a network trained on the neutral state (Figures 6-8).

Conclusion and Future Work

The results are favorable for the set of images used in this research. The work focused mainly on identifying true emotions, since humans can hide their expressions and appear neutral. Because emotions are identified by studying the heat map of the face, this approach can be used to scan people even without their knowledge, making it useful in security zones. The challenges here are occlusion caused by hair and spectacles, and natural temperature variation caused by a person's metabolism. Our future work is to apply the method to images with complex backgrounds and uneven illumination, and to reduce false detections.

References

- Pollina DA, Dollins AB, Senter SM, Brown TE, Pavlidis I, Levine JA, Ryan AH. Facial skin surface temperature during a concealed information test. Ann Biomed Eng 2006.

- Zhu Z, Tsiamyrtzis P, Pavlidis I. Forehead thermal signature extraction in Lie Detection. Conf Proc IEEE Eng Med Biol Soc 2007; 2007: 243-246.

- Stemberger J, Allison RS, Schnell T. Thermal imaging as a way to classify cognitive workload. Proceedings of Canadian Conference on Computer and Robot Vision, Ottawa, 2010.

- Pavlidis I, Levine J, Baukol P. Thermal Image Analysis for Anxiety Detection. Proceedings 2001 International Conference on Image Processing, Thessaloniki, 2001.

- Owusu E, Zhan Y, Mao QR. A Neural-AdaBoost based facial expression recognition system. Exp Syst Appl 2014; 41: 3383-3390.

- Hemalatha G, Sumathi CP. A study of techniques for facial detection and expression classification. Int J Comput Sci Eng Survey 2014; 5: 27-37.

- Kaur M, Vashisht R, Neeru N. Recognition of facial expressions with principal component analysis and singular value decomposition. Int J Comput Appl 2010; 9: 36-40.

- Bajaj N, Happy SL, Routray A. Dynamic model of facial expression recognition based on eigen-face approach. Proceed Green Energy Syst Conf 2013.

- Praseeda Lekshmi V, Sasikumar M. A neural network based facial expression analysis using gabor wavelets. Proceed World Acad Sci: Eng Technol 2008; 44: 593-597.

- Parveen P, Thuraisingham B. Face recognition using multiple classifiers. Proceedings of the 18th IEEE International Conference on Tools with Artificial Intelligence, 2006.

- Yang S, Bhanu B. Facial expression recognition using emotion avatar image. Face and Gesture 2011, Santa Barbara, 2011.

- Berretti S, Amor BB, Daoudi M, Bimbo AD. 3D facial expression recognition using SIFT descriptors of automatically detected keypoints. Visual Computer 2011; 27: 1021-1036.

- Sastry SS, Rao CN, Mallika K, Tiong HS, Mahalakshmi KB. Co-occurrence matrix and its statistical features as an approach for identification of phase transitions of mesogens. Int J Innovat Res Sci Eng Technol 2013.

- Abidin Z, Harjoko A. A neural network based facial expression recognition using fisherface. Int J Comput Appl 59: 30-34.

- Gosavi AP, Khot SR. Facial expression recognition using principal component analysis with singular value decomposition. Int J Adv Res Comput Sci Manag Stud 2013; 1: 158-162.

- Paithane AN, Hullyalkar S, Behera G, Sonakul N, Manmode A. Facial emotion detection using eigen faces. Int J Eng Comput Sci 2014; 3: 5714-5716.

- Punitha A, Kalaiselvi Geetha M. Texture based emotion recognition from facial expressions using support vector machine. Int J Comput Appl 2013.

- Lisetti CL, Rumelhart DE. Facial expression recognition using a neural network. Proceedings of the Eleventh International FLAIRS Conference, 1998.

- Vijayarani S, Priyatharsini S. Facial feature extraction based on FPD and GLCM algorithms. Int J Innovat Res Comput Commun Eng 2015; 3: 1514-1520.

- Thuseethan S, Kuhanesan S. Eigen face based recognition of emotion variant faces. Comput Eng Intell Syst 2014.

- https://arxiv.org/ftp/arxiv/papers/1105/1105.6014.pdf